The Dual Impact of Artificial Intelligence on Student Mental Health and Wellbeing

The ability of AI to provide 24/7, anonymous, and non-judgmental support can normalize help-seeking behaviors and proactively identify at-risk students who might otherwise remain unaddressed. This represents a fundamental shift towards a more proactive and preventative mental health culture within educational environments.

The escalating demand for mental health support among students, coupled with existing resource limitations, positions Artificial Intelligence (AI) as a potentially transformative solution. AI offers unprecedented accessibility, affordability, and capabilities for early detection, particularly for addressing surface-level stress and organizational challenges. However, critical concerns persist regarding AI's current limitations in empathy, trust, and adaptability, as directly articulated by students. These limitations underscore a fundamental human-AI gap that current technology cannot yet fully bridge.

The responsible deployment of AI in this sensitive domain necessitates robust ethical and legal frameworks, including stringent data privacy protocols (e.g., FERPA, HIPAA, GDPR, COPPA) and proactive measures to mitigate algorithmic bias. A notable trust deficit arises from privacy concerns and the potential for AI to inadvertently amplify existing societal biases, demanding careful consideration. Current research highlights the nascent stage of empirical studies on AI's long-term impact on student well-being, emphasizing the urgent need for further rigorous investigation into phenomena such as digital fatigue, technostress, and social isolation. Successful integration of AI in student mental health support requires a human-centered approach, adherence to comprehensive ethical guidelines, and widespread AI literacy for all stakeholders. This balanced strategy aims for AI to serve as a force multiplier for human professionals, rather than a problematic substitute, maximizing benefits while minimizing inherent risks.

The Evolving Landscape of Student Mental Health and AI

The global mental health sector faces a critical juncture, marked by a significant increase in demand for services and an urgent need for innovative solutions to enhance psychotherapy and improve care delivery. This growing pressure directly impacts the student population, which navigates a complex array of academic, social, and personal stressors that frequently contribute to mental health challenges. In response, artificial intelligence-driven digital interventions are emerging as a promising avenue. These interventions encompass a broad spectrum of software systems and mobile applications that embed AI techniques to deliver, support, or evaluate mental health services, ranging from basic rule-based chatbots to sophisticated multi-turn dialogue systems.

The increasing integration of AI into higher education is fundamentally reshaping how students engage with their academic pursuits and personal lives, with AI tools becoming indispensable for various aspects of learning, communication, and even entertainment. This pervasive presence of AI in students' daily routines means that its influence on mental health extends far beyond dedicated mental health applications. It encompasses interactions with AI-powered learning platforms, social media algorithms, and entertainment tools, creating a digital environment where AI's impact is holistic and multifaceted. Consequently, a comprehensive understanding of AI's effects on student well-being must adopt a broad perspective, acknowledging both the potential benefits and harms across the entire digital ecosystem students inhabit.

The current landscape reveals that AI is not merely a technological enhancement but a critical tool to bridge existing capacity gaps within often-overwhelmed traditional mental health services. The persistent challenge of rising demand, coupled with limited resources, frequently results in long wait times and restricted access to care. In this context, AI offers the potential to create new, accessible pathways to support that might otherwise be unavailable to a significant portion of the student population. This perspective underscores that the ethical and practical considerations of AI in student mental health are not solely about optimizing existing services but about forging innovative avenues for care.

Despite this burgeoning integration and considerable interest, the overall impact of AI on student well-being remains largely underexplored. There is a notable scarcity of rigorous experimental studies in this crucial area, particularly concerning long-term effects. This critical knowledge gap highlights an urgent need for increased funding and prioritization of research. Without robust empirical evidence, educational institutions and policymakers are integrating AI into student environments with limited understanding of its long-term consequences. Such research is essential to provide the necessary evidence base for developing effective and ethically sound implementation strategies, ensuring that technological advancements are guided by data rather than solely by anecdotal evidence or commercial claims.

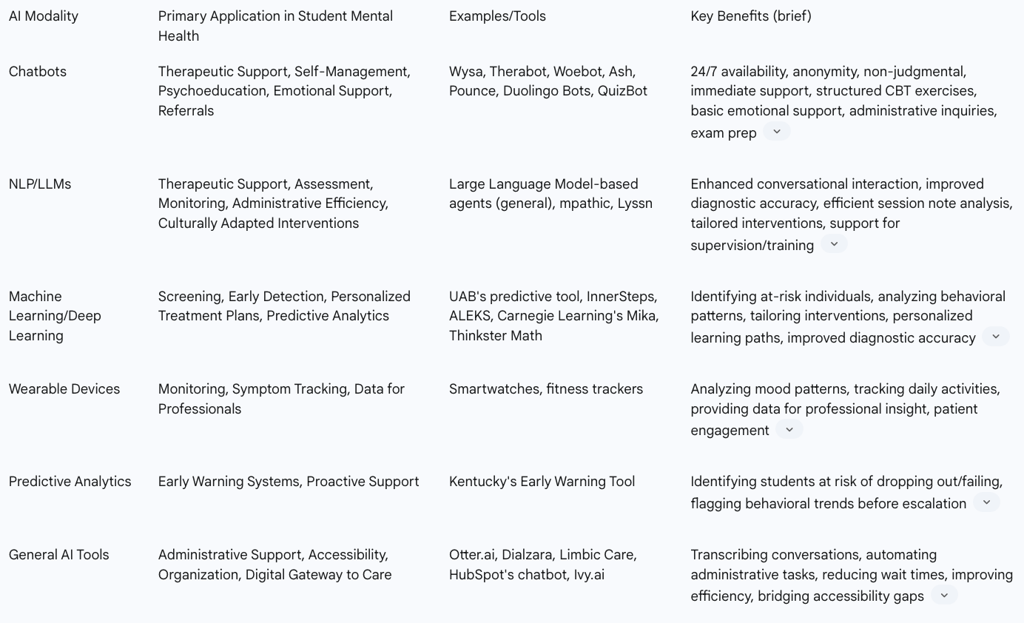

AI Modalities and Their Applications in Student Mental Health Support

Artificial intelligence is being deployed across various phases of mental health care, from initial screening to ongoing therapeutic support, primarily leveraging conversational AI and machine learning techniques. The most common AI modalities include chatbots, natural language processing (NLP) tools, machine learning (ML), deep learning models, and large language model (LLM)-based agents. These technologies are predominantly utilized for support, monitoring, and self-management purposes, rather than serving as standalone treatments. AI applications are mapped across five key phases of mental health care: pre-treatment (screening), treatment (therapeutic support), post-treatment (monitoring), clinical education, and population-level prevention.

In student-specific contexts, AI offers diverse applications:

Early Detection and Diagnosis: AI, through machine learning, can be trained to identify early signs of mental health conditions by analyzing various data points, such as speech patterns, social media activity, or data from wearable devices. This capability provides mental health professionals with additional data beyond traditional clinical analysis, offering deeper insights into an individual's daily activities and challenges, which can lead to more accurate diagnoses. For instance, researchers at the University of Alabama at Birmingham (UAB) have developed an AI tool that uses machine learning to predict college students at heightened risk of anxiety and depression disorders. This tool identifies patterns in existing school data, such as age, biological sex, years in school, race and ethnicity, and academic majors, even before students actively seek help. This proactive identification is particularly valuable for students who might not recognize their need for support or are reluctant to seek traditional care.

Therapeutic Support Tools: Virtual mental health assistants, particularly chatbots, serve as accessible outlets for expression and can facilitate referrals to human mental health professionals when appropriate. Applications like Woebot and Wysa specifically utilize Cognitive Behavioral Therapy (CBT) principles to track emotions over time, identify patterns, and suggest tools for managing specific emotions and everyday stress. Wysa, a prominent AI-driven platform, offers personalized, evidence-based CBT guidance and is available 24/7, with an option for human therapist support. Therabot focuses on delivering structured CBT exercises through a chatbot interface. Beyond direct therapeutic interactions, AI-powered storytelling apps like InnerSteps are being developed to build mental well-being in children (ages 3-12) by creating unique narratives based on their interests and mental health needs, incorporating CBT-rooted coping skills informed by child psychologists.

Personalized Treatment Plans: AI can provide real-time feedback and track patient progress, allowing interventions to be precisely tailored and continually adjusted based on individual needs and responses, even outside traditional therapy sessions. This adaptive capability enables a dynamic approach to care that can optimize treatment outcomes.

Administrative Efficiency for Professionals: AI tools can automate various administrative and back-office tasks for mental health professionals, significantly reducing their workload and freeing up valuable time for direct patient interaction and focus on more complex cases. Examples include platforms like Dialzara, which automates administrative tasks for providers, and AI transcription software for session notes, which can enhance efficiency in documentation.

Bridging Accessibility Gaps: AI tools offer round-the-clock availability, a substantial advantage over traditional campus counseling resources that typically have limited hours and operate only on weekdays. This constant availability ensures students can access support precisely when needed. Furthermore, these applications allow users to maintain anonymity, which can foster a greater sense of comfort in sharing sensitive problems and insecurities without fear of judgment. The elimination of scheduling appointments further streamlines access, enabling students to seek support on their own time and at their convenience. AI also helps reduce geographical barriers, allowing for remote support that can scale up or down based on community needs.

Support for Neurodivergent Students: AI tools can provide crucial support for predictability and transitions by sending automated routine notifications to help prepare for schedule changes, offering visual timers and countdown alerts to ease transitions, and providing sensory break reminders based on individual patterns. These personalized systems can help neurodivergent students engage with learning materials and social interactions in ways that accommodate their unique needs, supporting emotional regulation and social skills development.
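The early-detection idea described in this list (scoring students from existing enrollment data and flagging those above a threshold for human follow-up) can be illustrated with a minimal sketch. It is not the UAB tool's method, which the text does not specify; it substitutes a plain logistic regression fitted by gradient descent on synthetic toy data. Every name, the feature encoding, the labels, and the 0.5 threshold are assumptions made purely for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit logistic-regression weights with plain batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def risk_score(x, weights, bias):
    """Return the modelled probability that a student may need support."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Synthetic toy rows (all values invented):
# [age scaled to 0-1, years in school scaled to 0-1, hypothetical major indicator]
TOY_ROWS = [
    [0.1, 0.00, 1.0],
    [0.2, 0.25, 1.0],
    [0.3, 0.25, 0.0],
    [0.5, 0.50, 0.0],
    [0.6, 0.75, 0.0],
    [0.9, 1.00, 0.0],
]
TOY_LABELS = [1, 1, 1, 0, 0, 0]  # invented labels: 1 = later needed support

WEIGHTS, BIAS = train_logistic(TOY_ROWS, TOY_LABELS)


def flag_for_outreach(x, threshold=0.5):
    """Flag a student for human follow-up; the threshold is an assumption."""
    return risk_score(x, WEIGHTS, BIAS) >= threshold
```

In this sketch a flag only queues a student for outreach by a human professional, in keeping with the supporting role the text assigns to AI; it is not a diagnosis.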

The consistent emphasis on AI's utility for support, monitoring, and self-management, alongside its capacity to aid diagnosis and automate administrative tasks, indicates that AI is being developed and positioned as a force multiplier for human mental health professionals, rather than a complete replacement. This suggests that successful AI integration will necessitate a collaborative model where AI handles routine, data-intensive, or highly accessible tasks, thereby allowing human experts to dedicate their time and specialized skills to complex cases, deeper therapeutic relationships, and crisis intervention. This also signals a necessary evolution in the training and roles of mental health professionals, who will require AI literacy to effectively leverage these new tools.

The ability of AI to provide 24/7, low-cost, anonymous access and, crucially, to detect risk before an individual seeks professional help represents a significant shift in mental health support. This moves care from a predominantly reactive, crisis-driven model to a proactive, accessible, and preventative one. The broader implication is the potential to substantially reduce the severity and prevalence of mental health issues among students by lowering traditional barriers to initial engagement and proactively identifying at-risk individuals who might otherwise remain unaddressed within conventional systems. Furthermore, AI's capacity to analyze granular data such as speech patterns, social media activity, or wearable data, track mood patterns, and tailor interventions based on individual needs suggests a level of personalized care that is exceedingly difficult, if not impossible, for human professionals to achieve at scale. This goes beyond simple customization to truly adaptive and responsive support that continuously adjusts based on individual responses. This could unlock new levels of efficacy in mental health interventions by providing precision care, but it also raises complex ethical questions regarding the use of such intimate data and the potential for over-surveillance or manipulation.

Table: Overview of AI Modalities and Their Applications in Student Mental Health

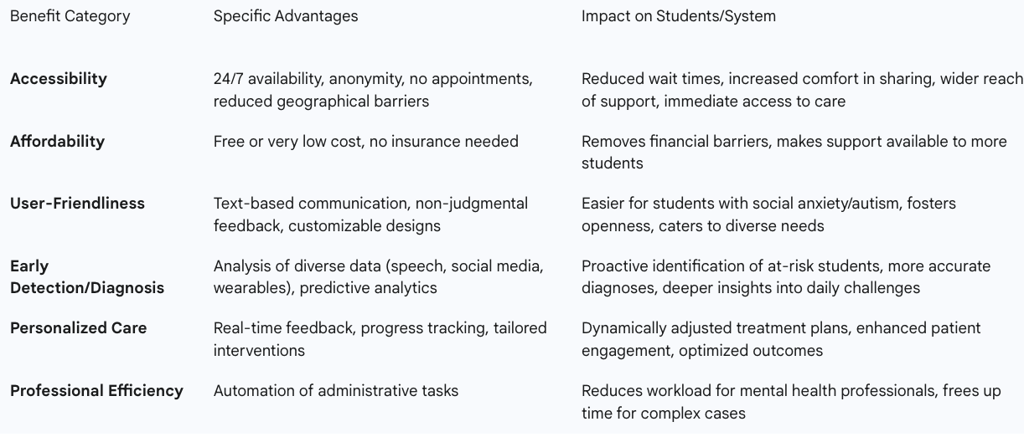

Key Benefits of AI in Enhancing Student Mental Health Accessibility and Care

Artificial Intelligence offers substantial advantages in addressing the pervasive mental health challenges faced by students, primarily by enhancing accessibility, improving affordability, and providing user-friendly interfaces. These benefits collectively bridge critical gaps in traditional mental health services.

Enhanced Accessibility: AI-driven tools are available 24/7, offering a significant advantage over traditional campus counseling resources, which typically operate with limited hours and only on weekdays. This constant availability ensures that students can access support precisely when they need it, regardless of the time of day or week. Furthermore, these applications allow users to maintain anonymity, fostering a greater sense of comfort in sharing personal problems and insecurities without fear of judgment. The elimination of scheduling appointments further streamlines access, enabling students to seek support on their own time and at their convenience. AI also plays a crucial role in reducing geographical barriers to mental health care, allowing for remote support that can be scaled up or down based on community needs. Wysa, for instance, is explicitly highlighted for its 24/7 availability, providing immediate, evidence-based support without waiting times, and is trusted by millions worldwide. This combination of features fundamentally shifts mental health support from a reactive, crisis-driven model to a proactive, accessible one. Students can engage with support at the first sign of stress or discomfort without encountering the traditional barriers of stigma, high cost, or scheduling difficulties. This implies a potential cultural shift within educational environments where seeking mental health support becomes normalized and integrated into daily life, fostering resilience and addressing concerns before they escalate into crises.

Increased Affordability: Many AI tools are either free or very low cost, making them highly appealing, especially as they often negate the need for insurance or direct payment. This cost-effectiveness makes these applications a particularly desirable option for students facing financial difficulties who still require emotional support, thereby democratizing access to mental health resources.

User-Friendly and Non-Judgmental Interaction: These AI tools primarily rely on text-based communication, which can be especially beneficial for students with social anxiety or autism, as it may make them feel more comfortable expressing their problems and experiences. Students generally acknowledge AI's non-judgmental feedback and appreciate its non-biased nature and impartiality, finding comfort in its ability to facilitate opening up without feeling like a bother. Many apps also feature customizable settings and sensory-friendly designs, increasing their accessibility for a wider range of college students with diverse needs.

Improved Early Detection and Diagnosis: AI, through machine learning, can efficiently identify early signs of mental health conditions by analyzing various data points, including speech patterns, social media activity, or wearable data. This capability provides mental health professionals with a richer dataset beyond traditional analysis, leading to deeper insights into an individual's daily activities and challenges, and ultimately, more accurate diagnoses. This is particularly impactful for students who might not realize they need help or are reluctant to seek traditional care. The finding that AI can identify at-risk students before they visit a health provider, as demonstrated by UAB's research, highlights a critical "invisible gap" in traditional mental health systems. Many students may not recognize their need for help, may be reluctant to seek it due to stigma, or face practical barriers. AI's predictive capabilities can proactively identify these individuals, enabling targeted outreach and support that was previously impossible. This has profound implications for preventative care and ensuring equitable access to support for underserved or unrecognized populations within the student body.

Enhanced Engagement and Personalized Care: AI-driven mental health assistants, such as chatbots and trackers, can keep patients engaged in their care 24/7, whether by tracking symptoms or providing virtual support. AI can also tailor interventions based on individual needs, offering real-time feedback and progress tracking. By continuously tracking data, even outside therapy sessions, AI allows healthcare professionals to dynamically adjust treatment plans based on the patient's unique response.

Reduced Workload for Professionals: AI can automate various administrative and back-office tasks for mental health professionals, providing relief from administrative burdens and opening up more time for direct patient interaction and focus on more severe cases.

While students highly value AI's anonymity for comfort and non-judgment, making it easier to open up, research also reveals that this very anonymity can contribute to an "impersonal" nature and a lack of "deep relational engagement". This creates a paradox: the feature that effectively lowers the initial barrier to entry for some students may simultaneously limit the depth of therapeutic benefit they can receive for complex issues. This suggests that AI is highly effective for initial engagement and surface-level support but necessitates clear pathways and encouragement for students to transition to human interaction for more complex or sustained therapeutic needs.

Table: Key Benefits of AI in Student Mental Health

Critical Challenges and Risks: Student Perceptions and Clinical Concerns

Despite the promising benefits, the integration of AI into student mental health support is accompanied by significant challenges and risks, largely stemming from both student perceptions and inherent clinical and systemic concerns.

Student Perceptions of AI's Limitations

Students express considerable reservations regarding AI's current capabilities in providing comprehensive mental health support:

Lack of Empathy and Genuine Human Connection: Students consistently describe AI responses as "cold words" and articulate a profound sense that AI struggles to match the multifaceted, emotionally attuned, and adaptive responses required in therapeutic contexts. They emphasize that genuine human connection is a complex and powerful phenomenon, often achieved through physical contact and intimacy, which AI cannot replicate. This perceived lack of warmth and depth in AI interactions is a significant barrier to forming a meaningful therapeutic alliance.

Challenges in Building Trust and Therapeutic Alliance: A substantial barrier to trust, as articulated by students, is the potential for miscommunication, misinterpretation, and even misuse or manipulation by AI, leading to fears of "bad intentions" and data leaks. Students also note regulatory gaps and AI's avoidance or inadequate responses to sensitive topics like suicidal ideation as undermining trust. The concern that AI might be controlled by malicious actors further erodes confidence.

Limitations in Understanding Emotional Cues and Adaptability: Students doubt AI's ability to accurately identify and interpret nuanced human emotions or "read between the lines," believing AI is limited to programmed expressions and may make inaccurate or insensible assumptions. They observe that AI struggles to adapt to complex, unpredictable human behaviors and that its uniform, scripted responses can feel "fake and automatic," lacking genuine empathy. This rigidity limits AI's effectiveness in dynamic therapeutic interactions.

Dependence on Pre-programmed Responses: Student feedback highlights that AI often relies heavily on pre-programmed responses, lacking genuine understanding and the emotional depth needed to process emotions beyond predefined parameters. This can result in generic advice that fails to resonate with individual experiences.

Risk of Over-reliance and Therapeutic Misconception: Students have noted the risk of overestimating the therapeutic benefits of AI and underestimating its limitations, potentially leading to a "therapeutic misconception" where AI is seen as a substitute for real, professional care. This could result in unmet needs or a deterioration of mental health if AI cannot adequately respond to complex or escalating situations, inadvertently delaying or preventing students from seeking necessary human professional help.

Objective but Detached: While students appreciate AI's non-biased, judgment-free nature, finding comfort in its impartiality, they also perceive that this objectivity comes at the cost of true empathy, leading to feelings of "coldness or detachment". This creates a trade-off between the comfort of anonymity and the depth of human connection.

The consistent student feedback regarding AI's lack of empathy, genuine human connection, and adaptability points to a fundamental, rather than merely technical, limitation of current AI in replicating the nuanced, emotionally intelligent aspects of human therapeutic relationships. This suggests that for deep, complex psychological support, AI can only ever serve as a sophisticated tool, not a complete substitute for human interaction. The implication is that the focus of AI development in mental health should strategically leverage its strengths, such as data processing, accessibility, and routine tasks, while explicitly acknowledging and compensating for its inherent weaknesses in domains requiring profound emotional understanding, intuition, and dynamic human interaction.

Clinical and Systemic Concerns

Beyond student perceptions, mental health professionals and systemic analyses identify several critical risks:

Misdiagnosis and Inappropriate Interventions: Behavioral health practitioners who rely solely on AI for client assessments face a significant risk of misdiagnosis if they do not supplement AI-generated insights with their own independent assessments and judgment. Research from Stanford University indicates that AI therapy chatbots can introduce biases and failures, potentially leading to dangerous consequences, including increased stigma toward conditions like alcohol dependence and schizophrenia. Misdiagnosis can lead to inappropriate or unwarranted interventions, causing significant harm to clients.

Client Abandonment: Practitioners using AI to connect with clients must ensure timely responses to messages and postings, especially in cases of significant distress (e.g., suicidal ideation), to avoid claims of client abandonment in malpractice litigation. Failure to respond appropriately can have severe consequences.

Algorithmic Bias and Unfairness: AI's dependence on machine learning, which draws from large volumes of available data that may not be entirely representative of diverse client populations, carries a significant risk of incorporating biases related to race, ethnicity, gender, sexual orientation, and other factors. This can lead to unequal or discriminatory support for certain student groups. The National Institute of Standards and Technology (NIST) specifically notes that AI systems might increase the "speed and scale of biases" and "perpetuate and amplify resultant harms." Algorithmic bias stemming from unrepresentative or flawed training data is therefore not merely a technical flaw but a direct reflection and amplification of existing societal inequalities embedded within the data used to train these systems. This suggests that achieving equitable AI in mental health requires not just technical fixes, such as diverse datasets, but a systemic, interdisciplinary approach that incorporates deep sociological and cultural understanding into the design and auditing processes to avoid exacerbating existing disparities, particularly for marginalized student groups. This is a complex societal challenge that technology can either mitigate or worsen.

Data Privacy and Confidentiality Breaches: Storing sensitive patient data in the cloud raises significant privacy and confidentiality concerns, particularly in contexts where sensitive topics might be discussed. Clinicians are advised to ask critical questions about data access, future access, whether data is used for AI model training, and if patients can retract their data. The fear of surveillance or loss of control over personal information can contribute to increased anxiety among students.

Digital Fatigue, Loneliness, and Technostress: The increasing reliance on AI tools, especially for communication and entertainment, raises concerns about digital fatigue, isolation, anxiety, and technostress. Excessive screen time, often driven by AI-powered engagement, can contribute to these issues.

Reduced Face-to-Face Interactions: Continuous engagement with AI technologies may diminish interpersonal skills and emotional intelligence, affecting students' ability to form social connections and relax without digital stimuli, potentially leading to social isolation.

Job Displacement Concerns: The automation of administrative and instructional tasks through AI raises concerns about potential job displacement for certain roles in higher education, leading to anxiety and stress among support staff.

AI Hallucinations and Misinformation: Generative AI tools, such as large language models, have been known to invent false references and even fabricate data (AI hallucinations), posing significant challenges for academic integrity and the provision of reliable information in educational and mental health contexts.
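The timely-response duty noted under Client Abandonment can be made concrete with a small sketch that lists client messages still waiting on a human reply past a response window. The severity labels, the window lengths, and all names below are hypothetical; real windows would be set by clinical policy, and the severity is assumed to be assigned upstream and reviewed by a practitioner.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical response windows, listed from most to least urgent.
RESPONSE_WINDOWS = {
    "crisis": timedelta(minutes=15),
    "elevated": timedelta(hours=4),
    "routine": timedelta(hours=48),
}


@dataclass
class ClientMessage:
    message_id: str
    severity: str            # assumed to be one of the RESPONSE_WINDOWS keys
    received_at: datetime
    responded: bool = False  # True once a human practitioner has replied


def overdue_messages(messages, now):
    """Return unanswered messages past their window, most urgent first."""
    overdue = [
        m for m in messages
        if not m.responded and now - m.received_at > RESPONSE_WINDOWS[m.severity]
    ]
    urgency = list(RESPONSE_WINDOWS)  # dict order encodes urgency
    return sorted(overdue, key=lambda m: (urgency.index(m.severity), m.received_at))
```

A practitioner dashboard could call `overdue_messages` on a schedule so that unanswered high-severity messages surface first, keeping the human in the loop rather than leaving the reply to the AI.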

While AI's accessibility and anonymity are widely touted as significant benefits, research also reveals a causal link to potential negative outcomes. These very features can lead to a "detached" relationship and foster a "therapeutic misconception" where students might overestimate AI's capabilities, potentially delaying or preventing them from seeking necessary human professional help for more complex or severe issues. This suggests a critical risk: the ease of AI access might inadvertently create a false sense of adequate support, thereby hindering engagement with more appropriate, human-led interventions. Clear disclaimers, robust referral pathways, and comprehensive education on AI's limitations are therefore crucial.

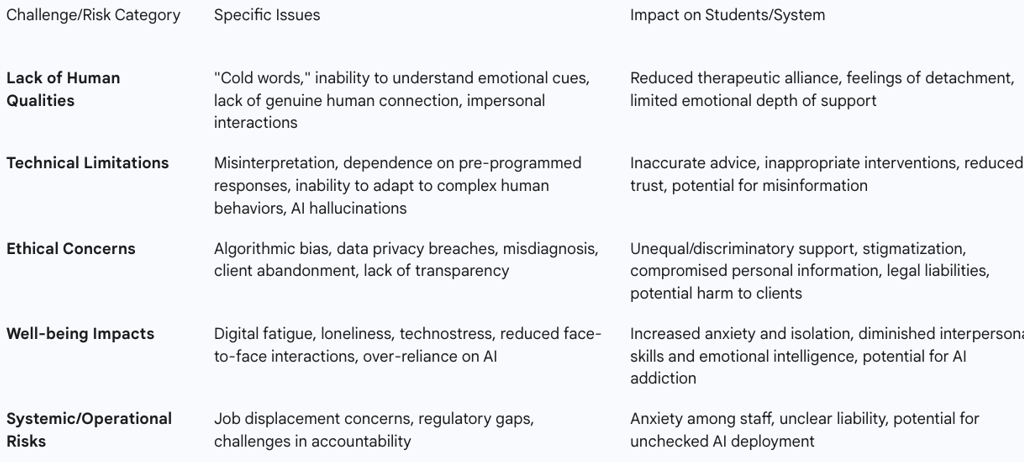

Table: Major Challenges and Risks of AI in Student Mental Health

Ethical and Legal Implications: Data Privacy, Bias, and Accountability

The integration of AI into mental health support systems for students introduces a complex array of ethical and legal considerations, with data privacy, algorithmic bias, and accountability standing as paramount concerns. Navigating these issues is crucial for ensuring the responsible and beneficial deployment of AI.

Informed Consent and Client Autonomy: A primary ethical concern revolves around obtaining truly informed consent from university students. Many students may not fully comprehend how their sensitive mental health data will be used, stored, or shared, potentially leading to violations of their autonomy. Explicitly requesting consent for such sensitive data collection could inadvertently increase anxiety or stigma, potentially discouraging students from seeking essential help. Behavioral health practitioners have a fundamental duty to explain the potential benefits and risks of AI use as part of the informed consent process and to respect clients' judgment about whether to accept or decline its use in treatment.

Data Privacy and Confidentiality: The confidentiality of mental health data is of utmost importance due to its highly personal and sensitive nature. Risks include data breaches, unauthorized access, and misuse by third parties. Universities and developers must implement strong encryption, robust access controls, and effective anonymization techniques to protect sensitive data. However, complete anonymization can be challenging, especially when data is used for personalized interventions, raising ethical questions about re-identification risks. The fear of surveillance or loss of control over personal information can significantly contribute to increased anxiety among students, potentially undermining the very well-being AI aims to support.

Compliance with Data Protection Laws: Educational institutions deploying digital mental health support systems must navigate and comply with a complex web of data protection laws. Key regulations include the Family Educational Rights and Privacy Act (FERPA) in the U.S., which governs student education records; the Health Insurance Portability and Accountability Act (HIPAA), which regulates protected health information; and the General Data Protection Regulation (GDPR) in the European Union, which imposes strict requirements on data processing, consent, and cross-border data transfers. Compliance involves ensuring data minimization, purpose limitation, and secure storage, with severe penalties for violations. Universities must also navigate potential jurisdictional conflicts when using cloud-based or multinational platforms. For K-12 settings, the Children's Online Privacy Protection Act (COPPA), which governs the online collection of personal information from children under 13, is an additional critical consideration.

Student Rights and Control Over Personal Data: Students retain key rights over their mental health data. Laws like GDPR and FERPA give them rights to access their information and to request corrections, and GDPR additionally provides a right to request deletion. However, universities face challenges in fulfilling these requests efficiently, particularly when data is processed by third-party vendors. Clear procedures for handling data subject requests, along with transparent policies on data retention periods and conditions for lawful processing, must be established. Without robust mechanisms, institutions risk undermining trust and facing regulatory sanctions.

Algorithmic Bias and Fairness: AI-driven mental health systems rely on algorithms that may inadvertently perpetuate biases. Factors such as underrepresented data, cultural insensitivity, or flawed training datasets can skew outcomes, leading to unequal or discriminatory support for certain student groups. Ensuring equity in algorithmic decision-making requires continuous auditing, diverse data representation, and inclusive design practices. NIST notes that AI systems might increase the "speed and scale of biases" and perpetuate resultant harms.

Transparency and Explainability: The ethical deployment of AI systems depends heavily on their transparency and explainability. This involves providing relevant information about an AI system, its operations, and outputs to users or "AI actors," tailored to their role and understanding. This can include details on design decisions, training data, model structure, intended use, and deployment decisions. Transparency is essential for addressing issues like incorrect AI outputs and ensures accountability for AI outcomes.

Liability and Accountability: In cases of data breaches or non-compliance, liability may be distributed among universities, mental health service providers, and third-party vendors, depending on contractual agreements and regulatory frameworks. Universities could face fines, lawsuits, and reputational damage if negligence is proven, while vendors may be held accountable for security failures. Proactive measures—such as regular audits, breach notification protocols, and clear liability clauses in contracts—are essential to mitigate risks. UNESCO emphasizes that AI systems should be auditable and traceable, with oversight, impact assessment, and due diligence mechanisms in place.

Human Oversight and Determination: Ethical guidelines consistently stress that AI systems should not displace ultimate human responsibility and accountability. Human intervention is necessary if AI errors are undetected or uncorrectable by the system itself, ensuring a critical human-in-the-loop component.

The repeated concerns expressed by students and professionals regarding privacy, data misuse, lack of transparency, and potential for manipulation directly contribute to a significant trust deficit. If students "feel paranoid about their information being leaked", even the most technologically advanced and potentially beneficial AI tool will face substantial barriers to widespread adoption and effective utilization. This indicates that technical security measures alone are insufficient; institutions must actively cultivate trust through radical transparency, clear and accessible communication about data governance practices, and demonstrable accountability to overcome this fundamental deficit.

The existence of comprehensive, internationally recognized frameworks like UNESCO's and NIST's, alongside specific legal acts such as FERPA, HIPAA, and GDPR, indicates a global consensus that AI innovation, particularly in sensitive domains like mental health, must be guided by ethical principles from its inception, rather than being an afterthought. This suggests that the rapid advancement of AI necessitates a proactive, iterative regulatory process to prevent potential harms and ensure that technological progress aligns with fundamental human values and rights. These frameworks provide a crucial blueprint for responsible development, deployment, and ongoing governance.

Algorithmic bias, which stems from unrepresentative data or flawed training datasets, is not merely a technical imperfection but directly leads to unequal or discriminatory support. This establishes a clear causal chain where biased data inputs result in inequitable health outcomes, thereby exacerbating existing societal disparities. The profound implication is that achieving equitable AI in mental health requires not just technical fixes, such as diversifying datasets, but a systemic, interdisciplinary approach to data collection and curation that prioritizes diversity and inclusion, alongside continuous human oversight to identify and correct emergent biases. This challenge is deeply rooted in societal structures and requires more than just technological solutions.

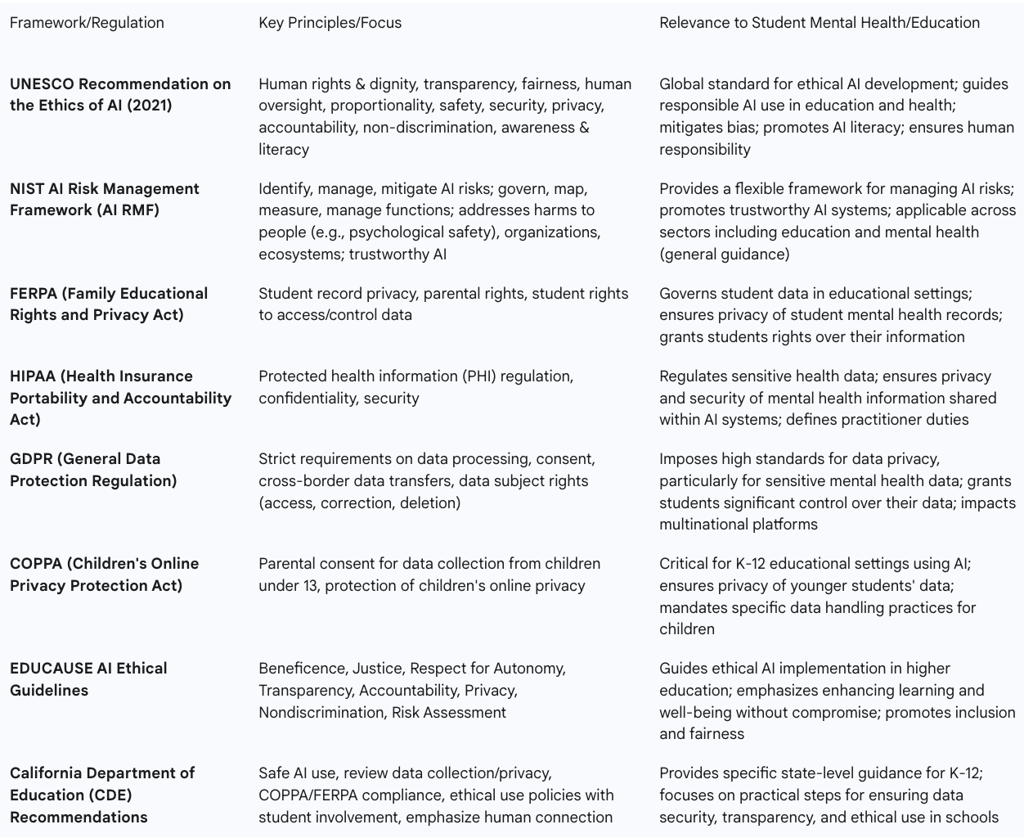

Table: Ethical and Legal Frameworks for AI in Mental Health and Education

Current Research, Emerging Trends, and Future Directions

The integration of AI into student mental health and well-being is a rapidly evolving field, yet current literature indicates that its comprehensive impact remains largely underexplored. There is a notable scarcity of rigorous experimental and clinical studies in this area, underscoring a critical need for more empirical investigations to fully understand AI's long-term effects within higher education contexts. This gap between rapid AI development and slower empirical validation presents a significant challenge for responsible implementation, highlighting the necessity for proactive research to inform policy and practice.

Key Research Areas and Questions

Ongoing research is focused on several critical areas:

Long-term Effects: Further investigation is urgently needed to examine the long-term effects of data-driven mental health interventions on student well-being, their perceptions of privacy, and the overall sustainability of AI-based support systems.

Digital Fatigue, Loneliness, and Technostress: Studies are increasingly focusing on concerns about excessive screen time, over-reliance on AI tools, and reduced face-to-face interactions, which may lead to digital fatigue, social isolation, anxiety, and technostress among students. More work is needed on the intertwined issues of social isolation and the potential for addiction to AI technologies, particularly in conjunction with social media use.

Impact on Interpersonal Skills and Emotional Intelligence: Research is exploring how over-reliance on AI may diminish students' interpersonal skills and emotional intelligence, affecting their ability to form social connections and engage in meaningful real-world interactions.

Safe AI Integration and Trust: Ongoing research continues to explore how to safely integrate AI, including fundamental questions about trust, accountability, and what happens when technology makes mistakes.

Data Privacy and Surveillance Impacts: Future studies must directly address data privacy, surveillance concerns, and their specific impact on students' mental well-being, particularly given the sensitive nature of mental health data.

Human-AI Interaction Dynamics: Psychologists are actively researching human-technology interaction to ensure safe and effective AI use, investigating whether people are more likely to trust AI agents based on their social etiquette and how to maintain human engagement in decision-making when AI systems operate rapidly.

Culturally Adapted Interventions: A promising area of research involves leveraging large language models (LLMs) to help providers tailor mental health interventions for specific populations with significantly less effort than in the past. This goes beyond simple translation to adapting protocols with relevant cultural metaphors for diverse groups, thereby improving health equity.

Emerging Trends

Several trends indicate the evolving landscape of AI in student mental health:

AI Literacy as an Imperative: Equipping students with AI literacy skills is becoming essential for navigating a technology-driven world. This goes beyond technical understanding to include a deeper grasp of AI's ethical, societal, and cultural implications. Educators are central to this endeavor, tasked with introducing AI concepts, guiding ethical discussions, and helping students apply AI to real-world problems. This necessitates professional development and a multidisciplinary approach to integrating AI across the curriculum.

AI in Clinical Practice: Mental health professionals are increasingly exploring how LLMs can enhance efficiency, expand access to mental health support, and even deepen the therapeutic relationship. This includes the use of chatbots for assessment (e.g., depression, suicide risk), AI transcription software for session notes, analysis of wearable device data to understand patients' daily lives, and AI-powered tools for supervision and training, including simulations for therapists-in-training. This indicates a redefinition of roles for educators and mental health professionals, shifting towards AI literacy, ethical guidance, and a focus on uniquely human aspects of care.

Pilot Programs in Educational Settings: Various pilot programs are underway to integrate AI responsibly. In K-12 settings, efforts focus on instruction and support services, such as AI-powered tutoring (Indiana, Iowa) and student data management for identifying at-risk students (Kentucky, New Mexico). At the university level, pilot studies are assessing chatbot effectiveness in psychiatric inpatient care (ChatGPT) and for Mindfulness-Based Stress Reduction (MBSR) programs for students with depressive symptoms (Hong Kong). Dartmouth researchers conducted a clinical trial of Therabot, finding significant symptom improvements for depression and anxiety. AI literacy pilots, such as Operation HOPE and Georgia State University's initiative, are working to build technical, entrepreneurial, and financial literacy skills in underserved youth to prepare them for an AI-powered workforce.

Dedicated AI-Powered Apps for Students: Applications specifically designed for mental health support are emerging. Examples include Ash, an AI designed for therapy that offers a private, judgment-free space for sharing thoughts, providing personalized insights, and supporting long-term growth. InnerSteps is an AI-powered storytelling app for children (ages 3-12) that builds unique narratives incorporating CBT-rooted coping skills.

Predictive Analytics for At-Risk Students: Tools like the one developed by UAB researchers are demonstrating strong predictive accuracy in identifying college students at heightened risk of anxiety and depression disorders from the general student population, even before they seek help.

Future Directions

The future trajectory of AI in student mental health points towards several critical areas:

Balanced AI Integration: Educational institutions should aim for a balanced approach that combines AI's efficiency with human-centered pedagogical methods to foster interpersonal skills and emotional intelligence. This involves leveraging AI as a tool to enhance, rather than replace, human connection.

Policy Development and Training: There is a pressing need for comprehensive policies addressing data privacy, ethical AI use, and robust training programs to improve technological literacy for both students and educators.

Evolution of Teacher Identity: AI integration necessitates a redefinition of teacher identity. Students perceive a shift in their primary source for academic support from teachers to AI tools, leading to a diminished centrality of teachers for certain tasks. However, students consistently emphasize the irreplaceable human element, valuing teacher empathy, humor, cultural understanding, and the capacity to build emotional connections. Effective teachers are seen as those who strategically use AI while maintaining classroom authority, critical reflection, and student engagement. This reframes the teacher's role as an orchestrator of learning, transforming AI into a dialogic resource rather than a substitute authority, ensuring students think and reflect instead of merely copying.

Standardizing Ethical Guidelines and Strengthening Accountability: Moving forward, policy development should focus on standardizing ethical guidelines, strengthening accountability mechanisms, and enhancing student involvement in data governance.

More Empirical Investigations: The scarcity of experimental studies highlights the nascent stage of research, underscoring the need for more empirical investigations, including randomized controlled trials, to fully understand AI's long-term impact on student well-being in higher education contexts.

The rapid advancement of AI technologies and their widespread adoption in educational environments create a dynamic where the gap between technological capabilities and empirical understanding of their impact on student well-being is widening. This situation necessitates a proactive and substantial increase in rigorous research to inform responsible policy and practice. Furthermore, the integration of AI requires a holistic ecosystem approach, involving multi-stakeholder collaboration across education, mental health, and policy domains. This comprehensive strategy is essential to foster positive outcomes and effectively mitigate the inherent risks, ensuring that AI contributes constructively to the mental health and well-being of the next generation.

Conclusions

The integration of Artificial Intelligence into student mental health and well-being presents a landscape of profound opportunities alongside significant challenges. AI tools offer unprecedented accessibility, affordability, and capabilities for early detection and personalized support, addressing critical gaps in traditional mental health services. The ability of AI to provide 24/7, anonymous, and non-judgmental support can normalize help-seeking behaviors and proactively identify at-risk students who might otherwise remain unaddressed. This represents a fundamental shift towards a more proactive and preventative mental health culture within educational environments.

However, the current limitations of AI, particularly its struggle with genuine empathy, building trust, and adapting to complex human emotions, are consistently voiced by students and recognized by professionals. These limitations highlight a fundamental human-AI gap, indicating that AI serves most effectively as an augmentative tool rather than a complete substitute for human interaction. Furthermore, critical ethical and legal considerations, including data privacy, the potential for algorithmic bias, and accountability, must be meticulously addressed. The pervasive concern regarding data misuse and the amplification of societal biases through AI systems underscores the necessity for robust ethical frameworks and transparent governance.

To maximize the benefits and mitigate the risks, a human-centered approach is paramount. This involves leveraging AI as a force multiplier for mental health professionals, enabling them to focus on complex cases while AI handles routine and data-intensive tasks. It also necessitates a redefinition of roles for educators and mental health practitioners, emphasizing AI literacy, ethical guidance, and the cultivation of uniquely human aspects of care. Ongoing, rigorous empirical research is crucial to understand the long-term effects of AI on student well-being, particularly concerning digital fatigue, social isolation, and technostress. Policy development must be adaptive and iterative, ensuring that ethical guidelines, data protection laws (such as FERPA, HIPAA, GDPR, and COPPA), and accountability mechanisms keep pace with technological advancements.

Ultimately, the successful integration of AI in student mental health requires a collaborative, multi-stakeholder approach that prioritizes student well-being, fosters trust through transparency, and ensures that technological innovation aligns with fundamental human values and rights. By embracing AI thoughtfully and ethically, educational institutions can enhance mental health support systems, making them more accessible, efficient, and responsive to the diverse needs of students.