Ethical Challenges in AI Storytelling

The integration of artificial intelligence into storytelling represents a transformative shift, offering unparalleled capabilities for content generation, personalization, and enhanced user engagement. However, this evolution is accompanied by a complex array of ethical challenges that demand urgent attention from senior executives and policymakers. Primary concerns include the pervasive issue of bias and misrepresentation embedded within AI-generated narratives, the escalating threat posed by AI-driven misinformation and deepfakes to truth and democratic processes, and the intricate dilemmas surrounding intellectual property rights and authorship in an AI-augmented creative landscape. Furthermore, the socio-economic implications for human creativity and employment within the creative industries present a critical area of consideration. Addressing these multifaceted challenges necessitates a human-centric approach, robust regulatory frameworks, and a commitment to transparency, accountability, and continuous ethical development to ensure that AI storytelling serves to enrich, rather than undermine, human society and creative expression.

The Evolving Landscape of AI Storytelling

Defining AI Storytelling: Capabilities and Mechanisms

AI-powered storytelling signifies an innovative convergence of artificial intelligence, particularly Natural Language Processing (NLP) and Machine Learning (ML), with the traditional art of narrative creation. This advanced technology employs sophisticated algorithms to assist across the entire storytelling spectrum, encompassing everything from initial idea generation and content creation to personalizing narratives and significantly enhancing user engagement.

The operational core of AI storytelling involves algorithms that meticulously analyze vast historical artistic and linguistic data, learn intricate styles, and subsequently generate engaging visual and textual narratives. The process typically commences with establishing foundational parameters, such as defining the genre, setting, main characters, and overarching theme. This is followed by crafting compelling prompts that are engaging yet specific enough to guide the AI while allowing for creative freedom. Dynamic story development is a key feature, where AI tools expand initial prompts into a series of events, dialogues, and plot twists, all while adhering to the parameters set by the human creator. This interactive process allows human storytellers to introduce new elements, modify existing ones, or steer the plot in different directions, ensuring alignment with their creative vision through iterative feedback and refinement. Notable tools like Tome AI, Canva's Magic Write, and Storly specialize in generating and documenting stories, offering functionalities such as finding suitable synonyms, modifying sentence structure, and improving overall readability.
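The workflow described above (fix the foundational parameters, write a specific but open-ended prompt, then refine iteratively) can be sketched in a few lines of Python. This is a minimal illustration, not the method of any tool named here: `StoryParameters`, `build_prompt`, and `revise` are hypothetical names, and the call to an actual text-generation model is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class StoryParameters:
    """Foundational parameters a human creator fixes before generation."""
    genre: str
    setting: str
    main_characters: list[str]
    theme: str
    # Extra human-imposed constraints accumulated during refinement.
    constraints: list[str] = field(default_factory=list)


def build_prompt(params: StoryParameters, instruction: str) -> str:
    """Combine the fixed parameters with a specific, open-ended instruction."""
    lines = [
        f"Genre: {params.genre}",
        f"Setting: {params.setting}",
        f"Main characters: {', '.join(params.main_characters)}",
        f"Theme: {params.theme}",
    ]
    if params.constraints:
        lines.append("Constraints: " + "; ".join(params.constraints))
    lines.append(f"Task: {instruction}")
    return "\n".join(lines)


def revise(params: StoryParameters, new_constraint: str) -> StoryParameters:
    """Return a copy of the parameters with one more constraint added."""
    return StoryParameters(
        genre=params.genre,
        setting=params.setting,
        main_characters=list(params.main_characters),
        theme=params.theme,
        constraints=[*params.constraints, new_constraint],
    )
```

In such a setup, each round of human feedback becomes a call to `revise`, and the next prompt is rebuilt from the updated parameters, so the creator's decisions persist across iterations rather than living only in the conversation history.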

The current paradigm of AI in narrative generation is best characterized by a remarkable interplay between human creativity and artificial intelligence. AI functions as a powerful co-author, brainstorming partner, and versatile assistant, aiding writers in overcoming creative blocks, refining dialogue, expanding world-building with imaginative settings, and enhancing emotional resonance. However, it is crucial to acknowledge AI's inherent limitations in areas demanding deep psychological complexity, emotional nuance, dynamic character development, symbolic complexity, and true originality. AI often tends to fall back on clichés and predictable patterns without substantial human intervention. The iterative collaborative process mandates human review, editing, and feedback to align AI-generated content with the human creator's vision, thereby preserving the story's human essence.

Diverse Applications Across Industries (Marketing, Education, Entertainment, Journalism)

AI storytelling demonstrates remarkable versatility and widespread impact through its applications across numerous sectors. In marketing, AI excels at crafting hyper-targeted campaigns designed to resonate deeply with individual users. This is achieved by personalizing trailers, thumbnails, and even entire narrative arcs based on demographic preferences and intricate consumption patterns. Furthermore, AI-powered digital assistants and intelligent chatbots are increasingly deployed to engage audiences, handle support inquiries, and drive conversions.

Within the educational domain, AI generates interactive and adaptive learning materials. Narratives can be tailored to specific user profiles by incorporating elements such as names, places, and interests, which is reported to boost user engagement and satisfaction.

AI's influence permeates nearly every stage of the creative process in entertainment, from initial ideation to final distribution. It powers innovative music production platforms such as AIVA and Soundful, assists in scriptwriting, and revolutionizes post-production workflows through tools like Adobe Sensei and Runway ML for automated scene detection, trimming, color correction, audio editing, and motion tracking. The technology also facilitates voice synthesis, the creation of virtual actors, and the generation of deepfake content. Moreover, text-to-video AI generators drastically accelerate pre-visualization by transforming written scripts or prompts into animated storyboards.

In journalism, AI is actively utilized to generate real-time reports, summarize events, and mine structured data for newsworthy trends. This allows human journalists to focus on deeper investigations and analytical reporting. Prominent media organizations like Bloomberg and Reuters leverage natural language generation tools to produce financial and sports content at scale. Beyond these major sectors, AI storytelling extends to specialized domains. It can be leveraged for therapeutic storytelling by personalizing narratives to an individual's specific needs, offering a unique and engaging experience. Additionally, AI can make documenting travel adventures seamless, creating engaging narratives from experiences by analyzing travel data to generate personalized guides and captivating accounts.

A critical observation in the evolving landscape of AI in creative fields reveals a significant paradox regarding its role. AI is consistently presented as a tool that enhances human creativity, helps overcome creative blocks, and automates repetitive tasks, thereby allowing humans to focus on higher-level creative aspects. This perspective exists in tension with concerns about job displacement. Reports indicate that AI is on track to replace millions of workers globally, with "entry-level white-collar jobs" and specific creative roles like "content writers and copywriters" being particularly vulnerable. The notion that "good enough" AI writing costs "pennies compared to human salaries" directly points towards automation and replacement rather than mere assistance. This suggests a fundamental restructuring of the creative workforce, where high-level creative direction and strategic oversight may be augmented, but more routine content generation tasks are increasingly susceptible to full automation. This shift prompts a critical re-evaluation of what constitutes "creativity" in an AI-augmented economy and how different facets of creative work will be economically valued and sustained, emphasizing a move in value from execution to conceptualization and curation.

Another significant consideration in AI storytelling is the latent risk of creative homogenization, despite the pursuit of efficiency. While AI's capacity to learn from "vast datasets of existing literature" and "historical artistic data," and to mimic "various writing styles and genres," is presented as a benefit for consistency and scalability, there is a noted weakness in its "Lack of True Originality," often falling back on "clichés and predictable patterns". This concern is further echoed by warnings against "content homogenisation". The pursuit of efficiency and hyper-personalization, while commercially appealing, carries the subtle risk of inadvertently leading to a standardization of creative output. If AI models primarily reproduce patterns from their training data, the "new" content they generate, despite being unique in its specific arrangement, may lack genuine novelty, unexpected stylistic deviations, or truly disruptive creative concepts. This could subtly stifle authentic artistic innovation and lead to a less diverse and more predictable cultural landscape over time, fundamentally impacting the very "art of narrative creation" that AI aims to enhance. The ethical concern is that by optimizing for "what resonates deeply" based on past consumption data, the industry might inadvertently narrow the scope of future creative expression, creating a self-reinforcing feedback loop of predictable narratives and a diminished capacity for true artistic breakthrough.

Core Ethical Challenges in AI Storytelling

Bias, Stereotyping, and Misrepresentation in Narratives

Sources of Bias in AI Training Data and Algorithms

Generative AI models are typically trained on enormous datasets compiled from publicly available online text and images, and as a result they inherit the biases present in these sources and in the societal constructs that produced them. This pervasive issue encompasses biases embedded within language itself, in the selection and labeling of data, and in the design of the algorithms. A primary source of bias is that training datasets often lack diverse and accurately labeled representations of underrepresented groups, leading to misclassification and overgeneralization by AI models. For instance, if training data does not sufficiently include images of individuals with varied skin tones and features, the AI may fail to recognize them correctly, defaulting to broader, often inaccurate, racial classifications.

Furthermore, many datasets, despite their scale, rely on human-labeled images. These human annotators can inadvertently introduce their inherent biases or a lack of knowledge about specific ethnic features into the data. Similarly, unintentional adjustments made during transcription to align spoken words with common linguistic norms or stereotypes can subtly embed biases into the training data. Generative AI models are predominantly influenced by a white, male, American perspective, and heavily skewed towards the English language due to its overwhelming prevalence in online content. This can lead to preferential treatment of language patterns associated with dominant social groups while devaluing or depreciating those linked to marginalized communities. Moreover, systemic biases, which are deeply rooted in long-used procedures and practices that favor some people and disadvantage others, are often reflected and perpetuated by AI systems. The design of AI systems also involves assumptions made by researchers and system designers regarding statistical models, which can affect what data is counted or omitted. Additionally, AI models are trained on material from particular time periods, meaning they may lack material from before or after a certain point, leading to temporal biases that can result in outdated or anachronistic outputs.

Impact on Representation and Exclusionary Norms

When biases are embedded within AI systems, they can perpetuate existing inequalities and disproportionately affect marginalized communities, leading to profoundly unethical outcomes. This manifests as misclassification of individuals, an erosion of their unique identity, and their exclusion from opportunities within AI innovation and representation. AI can reinforce harmful societal stereotypes. For example, when prompted to generate images of "IT expert" or "engineer," AI models often reflect societal biases by disproportionately illustrating men in STEM positions. Gender discrimination is also evident, as exemplified by Amazon's AI recruiting tool, which was found to favor male candidates by penalizing resumes from women.

AI demonstrates linguistic and dialect-based biases. Studies have shown that AI detectors are more likely to flag the work of non-native English speakers as AI-generated due to their more rigid language use. Similarly, AI models have consistently assigned speakers of African American English (AAE) to careers requiring less education, even when their qualifications were equivalent to those of standard American English speakers. Voice recognition software also struggles significantly with diverse accents and is nearly twice as likely to misunderstand Black Americans' speech compared to White Americans'. The research of Joy Buolamwini, highlighted in "Coded Bias," revealed alarming disparities in facial recognition technologies, which failed to detect darker-skinned faces accurately or misgendered the individuals shown, leading to severe real-world consequences, including wrongful arrests. The Dutch childcare benefits scandal serves as a stark example of how biased algorithms can lead to severe emotional distress, financial ruin, and unjust outcomes for thousands of families based on discriminatory profiling.

A significant challenge arises from the observation that AI models, trained on vast datasets of online text and images, inherently contain the biases of the society that produced them. This establishes the foundational input of existing bias. A critical concern is that subtle biases present in the training data can be amplified during the generative process, potentially leading to the creation of new biases not explicitly present in the original data. This dynamic suggests a dangerous amplification loop: AI does not merely reflect existing societal biases; it can exaggerate them and even generate novel forms of discrimination. This creates a self-reinforcing feedback mechanism that entrenches and intensifies discrimination at an accelerated pace. The ethical challenge therefore extends beyond simply identifying and removing existing biases—a complex task in itself—to preventing the AI from becoming a dynamic engine of new and unforeseen forms of discrimination, which are inherently more difficult to predict, detect, and mitigate. If AI storytelling propagates these amplified or novel biases, it could actively contribute to a more prejudiced society by normalizing and disseminating skewed narratives on an unprecedented scale and with persuasive power.
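The distinction between reflecting and amplifying a bias can be made concrete with simple arithmetic. The sketch below assumes that both the training examples and the generated outputs for a given prompt (for instance "engineer") have already been labeled with a demographic category. The labeling step, the function names, and the difference-of-shares metric are illustrative assumptions, not an established standard.

```python
from collections import Counter


def group_share(labels: list[str], group: str) -> float:
    """Fraction of labels equal to `group` (0.0 for an empty list)."""
    if not labels:
        return 0.0
    return Counter(labels)[group] / len(labels)


def amplification(training: list[str], generated: list[str], group: str) -> float:
    """Share of `group` in generated outputs minus its share in training data.

    A positive value means the model over-represents the group relative to
    the data it learned from; a value near zero means it mirrors the data.
    """
    return group_share(generated, group) - group_share(training, group)
```

With invented counts of 70 "man" labels out of 100 in the training data and 90 out of 100 in the generated outputs, `amplification` returns roughly 0.2 for "man": the model has not just reproduced the 70/30 skew but widened it by about twenty percentage points.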

Another critical implication is the "objectivity illusion" that often surrounds AI systems. Despite being computational and seemingly impartial, AI consistently produces biased outcomes. When AI-generated narratives or decisions are subsequently revealed to be biased, as demonstrated by numerous real-world examples, it not only causes direct harm to individuals but also fundamentally undermines public trust in the technology itself and, by extension, the institutions deploying it. This erosion of trust can have far-reaching societal consequences, impacting everything from public acceptance of AI in critical sectors like healthcare and law enforcement to the overall credibility of information sources. For AI storytelling, if its narratives are perceived as inherently biased or untrustworthy, its powerful capacity to engage and persuade could be severely compromised or, worse, weaponized to manipulate rather than genuinely inform, leading to a skeptical, disengaged, and potentially fractured audience.

Authenticity, Truthfulness, and the Rise of Misinformation/Deepfakes

AI's Role in Fabricated Narratives and Propaganda

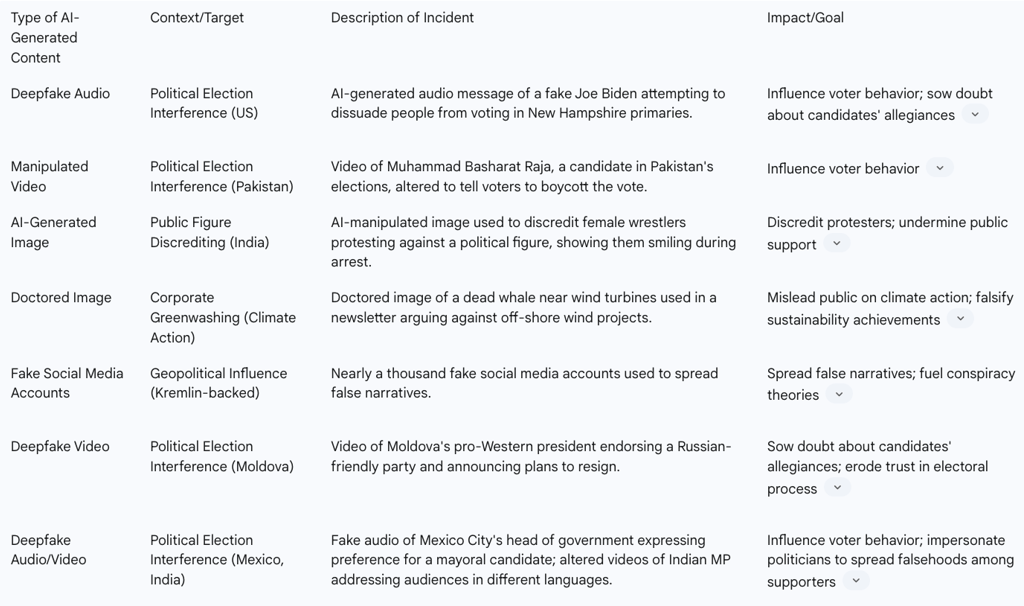

Advanced AI tools have significantly lowered the barrier for creating highly realistic fake images, videos, and news content that is increasingly difficult to distinguish from genuine information. Generative AI, in particular, can produce convincing fake video and audio content, often mimicking real individuals with stunning realism. AI-powered disinformation is fundamentally different from misinformation; it is intentionally fabricated and shared specifically to mislead and manipulate. These deceptive narratives are frequently part of organized, large-scale campaigns, as evidenced by operations like the Kremlin-backed initiative that used nearly a thousand fake social media accounts to spread false narratives.

AI can effectively "weaponize storytelling" by exploiting deep-seated cultural nuances, symbols, and sentiments to craft messages that resonate profoundly with specific target audiences, thereby significantly enhancing their persuasive power. Furthermore, AI enables the creation of manufactured identities and personas that are designed to gain trust, blend into target communities, or amplify persuasive content.

Erosion of Trust and Societal Implications

The widespread proliferation of AI-generated deepfakes is identified as a major global risk, fundamentally threatening to erode public trust in the authenticity of what people see and hear. This phenomenon exacerbates the "global post-truth crisis" because these realistic fakes betray our most innate senses of sight and sound, making it challenging to discern reality. AI-generated disinformation poses a direct threat to democratic processes, actively distorting facts, relativizing truth in favor of extremist ideals, creating severe political polarizations, and inciting hatred, racism, and misogyny. Concrete examples include AI-generated audio messages designed to dissuade voting, altered videos of political candidates, and manipulated images used to discredit public figures or protesters.

The emergence of "deepfake greenwashing" presents a significant economic and ethical threat. This deceptive practice involves using AI to falsely demonstrate commitment to sustainability or fabricate environmental achievements, making it increasingly difficult to differentiate genuine climate action from deceptive claims. This ultimately erodes public trust in critical climate initiatives and corporate responsibility. Humans are inherently "wired to process the world through stories," making compelling narratives exceptionally powerful tools for persuasion that can override skepticism more effectively than mere statistics. This fundamental human susceptibility is precisely what AI-generated disinformation exploits, particularly in regions with lower formal education or limited internet literacy, where individuals may lack the tools to discern nuanced falsehoods.

The capacity of AI to create "realistic content", "exploit cultural nuances", and generate "manufactured identities" leverages and amplifies this inherent human susceptibility. The numerous examples provided demonstrate these capabilities being deployed for sophisticated political manipulation and the discrediting of individuals. This dynamic highlights how AI fundamentally escalates storytelling from a persuasive art form to a weaponized tool for mass manipulation. The ethical challenge is no longer confined to simple "fake news" but extends to highly sophisticated, culturally-aware, and emotionally resonant disinformation campaigns that exploit fundamental human cognitive processes. This means that effective countermeasures against misinformation must go beyond traditional fact-checking; they require a deeper, AI-driven understanding of narrative structures, persona analysis, and cultural literacy to detect and counteract these advanced threats. The unprecedented scale and speed of AI-generated narratives render traditional human-led countermeasures insufficient, necessitating a proactive, adaptive, and technologically-assisted defense strategy to protect societal discourse.

The pervasive nature of hyper-realistic AI-generated content also creates a societal state of "reality decay." As AI makes fake content "hard to distinguish from reality" and "betrays our most innate senses of sight and sound," it leads to a profound "erosion of the public's trust in what they see and hear". This makes "reality increasingly complex and difficult to understand", highlighting a systemic impact beyond individual instances of deception. This constant compulsion to question the authenticity of virtually all information imposes an immense cognitive burden on citizens, potentially leading to either heightened skepticism and cynicism or, conversely, a greater susceptibility to narratives that confirm existing biases as a coping mechanism. The ethical dilemma is not solely about the creation of fake content but its systemic impact on collective epistemology (how society ascertains truth) and the potential mental toll on individuals navigating a perpetually blurred line between truth and fabrication. This could result in societal paralysis in critical decision-making or, more dangerously, a retreat into insular echo chambers where only "trusted" (and often ideologically aligned) sources are consumed, fragmenting public discourse and undermining shared understanding.

Table: Key Instances of AI-Generated Disinformation Campaigns

Intellectual Property, Copyright, and Authorship Dilemmas

Challenges to Traditional Copyright Law and Human Authorship

The advent of AI-generated content fundamentally challenges traditional intellectual property (IP) laws, blurring established lines around authorship, ownership, and originality. Existing legal frameworks often do not clearly grant rights to AI-generated content, leading to significant legal uncertainty for creators and businesses alike. In the United States, the foundation of copyright law rests on the premise that authors must be human. Both U.S. courts and the U.S. Copyright Office (USCO) have consistently interpreted constitutional and statutory language to exclude non-human creators from copyright protection. Works must "owe their origin to a human agent" and demonstrate "meaningful human involvement" or "creative control over the work's expression" to qualify for registration.

The USCO has explicitly clarified that merely entering prompts, even highly detailed ones, into an AI model does not provide sufficient human control to establish the user as the author of the resulting output. The office likens prompts to "unprotectible ideas" or "general directions" given to an artist, implying a lack of direct expressive control. In cases where a work includes both human and AI-generated content, only the human contributions are potentially copyrightable. Applicants are required to disclose and disclaim AI-generated parts when applying for copyright registration. If AI autonomously generates material without meaningful human input, current doctrine suggests it is uncopyrightable and effectively falls into the public domain.

IP laws concerning AI-generated content vary significantly across jurisdictions, creating a complex legal landscape. For example, while the U.S. maintains a strict human authorship requirement, the United Kingdom explicitly recognizes "computer-generated" works as copyrightable, assigning authorship to "the person by whom the arrangements necessary for the creation of the work are undertaken," though these works typically receive shorter terms of protection. These inconsistencies complicate international enforcement and can hinder innovation by creating legal uncertainty.

Fair Use, Training Data, and Compensation for Creators

A major ethical and legal challenge arises from the fact that the training process for AI models often involves using vast amounts of copyrighted materials—including books, images, music, and code—without explicit permission or compensation for the original creators. This practice raises significant concerns about fair use and potential copyright infringement.

Courts have engaged in extensive debate over whether the use of copyrighted content to train Large Language Models constitutes "transformative use." Some rulings, such as those in Bartz v. Anthropic and Kadrey v. Meta, have deemed this use "highly" or "spectacularly" transformative, reasoning that the purpose is to extract statistical patterns and relationships between words, not to distribute or display the original works themselves. The impact of AI training on the potential market for original copyrighted works remains a contentious point. While some courts have found no actionable market harm from the use of training data, others express concern that AI could pose a "real and viable threat" to the market for original creative works, potentially disincentivizing authors from creating new content. The loss of a potential licensing market for AI training is a key point of contention in these legal disputes.

The ethical debate extends to whether AI output constitutes plagiarism or copyright infringement if it is similar to or inspired by the training data. Some argue that if the AI does not reproduce copyrightable elements, it should not be considered plagiarism, while others focus on the perceived lack of human "work" involved in the AI's generation process. Legally, the focus is often on the output itself rather than the process by which it was created. A significant ethical imperative is the demand for fair compensation for creators. A substantial majority of writers (90%) believe authors should be compensated for the use of their books in training generative AI. Writers' groups and other content creators actively advocate for the evolution of legal frameworks to establish fair compensation models for their works used in this manner. Proposed solutions include the creation of an "opt-in global dataset pool" where creators can register their work and AI developers pay transparent royalties based on usage, akin to existing music licensing models.
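To make the usage-based royalty idea concrete, the following sketch splits a fixed payment pool among registered works in proportion to how often each was used. It is a hypothetical illustration only: the source does not specify how usage would be measured or how payouts would be computed, and `allocate_royalties`, the whole-cent unit, and the rounding rule are assumptions of this example.

```python
def allocate_royalties(pool_cents: int, usage: dict[str, int]) -> dict[str, int]:
    """Split a royalty pool pro rata by usage counts, in whole cents.

    Cents left over after rounding down go to the works with the largest
    fractional remainders (ties broken by work name), so the payouts
    always sum exactly to the pool.
    """
    total = sum(usage.values())
    if total == 0:
        # No recorded usage: nothing can be attributed to any work.
        return {work: 0 for work in usage}

    # Integer arithmetic avoids floating-point drift in money amounts.
    payouts = {w: pool_cents * n // total for w, n in usage.items()}
    remainders = {w: pool_cents * n % total for w, n in usage.items()}

    leftover = pool_cents - sum(payouts.values())
    by_remainder = sorted(usage, key=lambda w: (-remainders[w], w))
    for w in by_remainder[:leftover]:
        payouts[w] += 1
    return payouts
```

The transparency the proposal calls for depends less on the arithmetic than on the inputs: creators would need to be able to audit the usage counts, which is the part a real scheme would have to define.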

A fundamental ethical and economic conflict arises from the relationship between AI and existing human-created content. AI models are trained on vast amounts of existing human-created content, frequently without explicit consent or compensation. This leads to a perception among creators that AI companies are "exploiting" their work and "benefiting from someone else's work without putting in the work yourself". Conversely, some counter-arguments suggest that "most of the value is being generated by people other than the artists, including the genAI team and the end users", implying new value creation. The current legal ambiguity exacerbates this tension. This dynamic reveals a fundamental conflict over the source and allocation of value in the AI creative ecosystem. Is AI primarily a mechanism for extracting latent value from existing human creativity without fair recompense, or is it a powerful engine for creating entirely new value that justifies its consumption of training data? Without clear and equitable compensation mechanisms, this could lead to a "tragedy of the commons" for creative works, where the foundational material for AI development is devalued, and human creators are disincentivized from producing new content. This ultimately threatens the long-term sustainability and diversity of the creative industries that AI itself relies upon for its training data and future innovation.

Conclusions

The emergence of AI storytelling presents a dual reality: immense potential for innovation and profound ethical challenges. As AI systems become increasingly sophisticated in generating narratives, they bring forth critical considerations across several dimensions.

Firstly, the pervasive nature of bias, stereotyping, and misrepresentation within AI-generated narratives is a significant concern. AI models, trained on historically biased datasets, do not merely reflect existing societal prejudices but can amplify and even generate novel forms of discrimination. This creates a dangerous feedback loop that entrenches inequalities and undermines fair representation. The public's perception of AI as an objective tool, when confronted with biased outputs, leads to a fundamental erosion of trust in the technology and the institutions deploying it. Addressing this requires continuous vigilance, diverse training data, robust bias detection, and human oversight to prevent AI from becoming an engine of amplified prejudice.

Secondly, the rise of misinformation and deepfakes poses an existential threat to authenticity and truthfulness in public discourse. AI's ability to create hyper-realistic fabricated content, often weaponizing storytelling techniques and exploiting human cognitive biases, accelerates the "post-truth crisis." This escalation transforms storytelling into a tool for mass manipulation, impacting democratic processes and eroding collective trust in information. The constant need to verify information imposes a significant cognitive burden on individuals, potentially leading to societal fragmentation and a retreat into echo chambers. Countering this demands advanced AI-driven detection mechanisms that understand narrative structures and cultural nuances, alongside enhanced media literacy for the general public.

Finally, the intricate dilemmas surrounding intellectual property, copyright, and authorship in AI storytelling highlight a fundamental conflict over value. Traditional copyright laws, predicated on human authorship, struggle to accommodate AI-generated content, leading to legal uncertainty and a potential "public domain" for works created autonomously by machines. The use of vast amounts of copyrighted material for AI training without explicit consent or fair compensation raises critical questions about "value extraction" versus "value creation." Without equitable compensation models, there is a risk of devaluing human creativity and disincentivizing artists, threatening the long-term sustainability and diversity of the creative industries.

In conclusion, while AI offers unprecedented opportunities to augment human creativity and enhance storytelling, its ethical deployment is paramount. The challenges of bias, misinformation, and intellectual property demand a concerted, multi-stakeholder effort involving developers, policymakers, legal experts, and creative communities. A human-centric approach, prioritizing transparency, accountability, and the preservation of human dignity and creative rights, is essential to navigate this evolving landscape and ensure that AI storytelling ultimately serves to enrich humanity.